2020, July 20

David Sloan Wilson interview with Tim O’Reilly

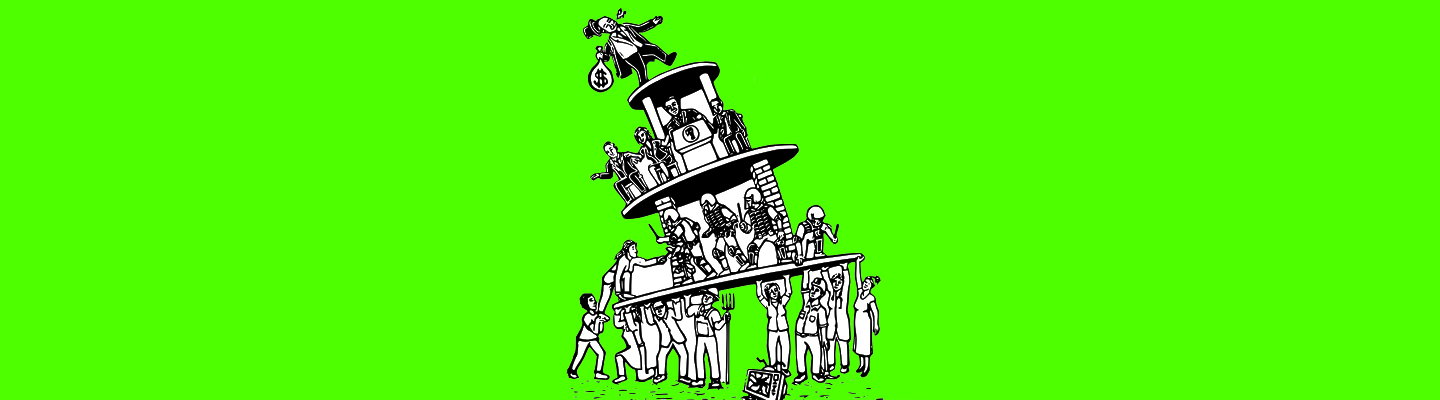

The thesis of the Third Way is that when it comes to positive social change, all three ingredients of an evolutionary process (the target of selection, variation oriented around the target, and the identification and replication of best practices) must be managed at a systemic scale. This is in contrast to the two dominant models of social change, laissez-faire and centralized planning. Laissez-faire doesn’t work because it simply is not the case that the lower-level pursuit of self-interest robustly benefits the common good. Centralized planning doesn’t work because the world is too complex for a group of experts to formulate and implement a grand plan.

The phrase “The Internet Age” loosely describes our current period of human history that—for better or for worse—has become dominated by electronic communication. It can be said to start with the invention of the telegraph, followed by radio, television, the internet, and the World Wide Web. The Internet Age has a strong self-organizing feel to it as if it just emerges from bottom-up processes without needing to be selected as a whole system. If so, then it would pose a challenge to the thesis of the Third Way.


But appearances can be deceiving and Tim O’Reilly is the perfect person to serve as our guide to the Internet Age. With an educational background in the classics, he became a technical writer and then a technical book publisher, conference producer, and online information provider. In 1992, his company published the bestselling Whole Internet User’s Guide and Catalog, which sold over a million copies and introduced the World Wide Web to a broader audience, covering it as the next big thing when there were only 200 websites. In 1993, his company created GNN (the Global Network Navigator), the first commercial, ad-supported website, which he sold to AOL in 1995 as one of the earliest transactions in what was to become the dotcom boom. He has become well known for convening and popularizing technology trends, including open source software, “web 2.0,” big data, the Maker Movement, and “government as a platform.” Today, he continues to play a central role through O’Reilly Media and his own writing, including his cleverly titled trade book WTF? What’s the Future and Why It’s Up to Us.

DSW: Greetings, Tim! Thanks for joining this important series of conversations. In grand Internet Age style, we have grown to know each other’s work quite well, even though we have only physically met once. An earlier conversation between us on Evonomics.com explores connections between your WTF? and my This View of Life: Completing the Darwinian Revolution. Please introduce yourself to our audience in your own words, realizing that while some will already know you well, others will be “meeting” you for the first time. How did an aspiring classics scholar become a Guru of the Internet Age?

TO’R: I tend to think of myself as a bit of an accidental entrepreneur. I didn’t want a “regular job,” but I had a family that I needed to support, so in 1977, when a computer programmer friend who did contract work got offered a three-month job writing a manual that he wasn’t quite comfortable pulling off, I offered to help him. (I was just commencing my first book, a study of science-fiction writer Frank Herbert, and so I was comfortable as a writer.) We eventually went into partnership together, on the premise that pairing a programmer and a writer was a way to get better manuals. My friend never did get comfortable writing and I became enamored with technology, so we broke up the partnership in 1983 and I carried the business forward on my own. I noticed that as software became more standardized, many of my consulting clients were asking for versions of the same manual, so I came up with the idea of retaining the rights and then customizing for each new client. Then, in 1985, we had a downturn in our consulting business (evolutionary pressure!) and came up with the survival strategy of turning some of the manuals we’d written into commercial books. The rest is history.

We went on to be the publisher of choice on many of the technologies that fueled the internet revolution. In 2000, the cover of Publishers Weekly read “The Internet Was Built With O’Reilly Books,” and everyone knew that was true. I had quite a few internet billionaires tell me that they’d started their company with the help of one of our books. But we also tried lots of different things: we launched the first ad-supported website and the first PC-based web server; we launched an ebook aggregation service in 2001 that grew into what is now the O’Reilly online learning platform.

And we always had an ecosystem view of everything we did. One of our mottoes has always been “Create more value than you capture.” We have had this notion that we have to be part of a bigger system, that our role is to create value for others and not just for ourselves. And we’ve tried to tell stories about the interconnectedness of technologies and the people behind them – that’s how we became involved in promoting big ideas like the commercial internet, open source software, big data and cloud computing (which were the key ideas behind “Web 2.0”), the maker movement, and the movement to improve government’s use of technology.

DSW: Thanks! Right away, we can see that you and your associates implemented Third Way thinking. You had a systemic target of selection, were fearless about trying out new things, and had the capacity to replicate what works. Can this be generalized? In other words, can positive aspects of the Internet Age be understood as a managed process of cultural evolution with systemic goals in mind? Can negative aspects be understood in terms of either laissez-faire or centralized planning?

TO’R: I have a lot of sympathy for the idea of evolutionary economics, but I’m not sure I’m all in on the “managed process” idea. A “designed process” might be a better term.

DSW: Let’s pause right here to explore the difference between these two words, “managed” and “designed”, in your own mind. I know from previous conversations in this series (especially with David Colander) that the same words have different associations for the many people who are being brought together by this series.

TO’R: To me, “management” suggests an active process in which decisions are made on an ongoing basis by a central actor. “Design” suggests that someone builds something that operates according to specific rules, and then steps away, with the things that follow being a consequence of that design. Of course, the two work together – someone designed an airplane, but someone has to manage its flight – it doesn’t fly on its own. But without the right design, it won’t fly no matter how hard you try to manage it.

DSW: OK, I get that the word “management” does have a top-down feel to it, so I’m happy to work with what you understand as “design”.

TO’R: If you look at both the internet and successful open source projects, they have what I call “an architecture of participation.” Linux, one of the flagship open source projects, is essentially a re-implementation of Unix, the operating system originally designed by Ken Thompson and Dennis Ritchie at Bell Labs. Unlike competing operating systems that were tightly tied to particular hardware, Unix was designed to run on a variety of machines. As might be expected of a system developed by a telecommunications company, the heart of the system was the set of rules by which programs worked together. Thompson and Ritchie wrote a small operating system kernel that could easily be “ported” to machines other than the one they originally developed it for, and then other researchers at AT&T and around the world wrote various programs that ran on top of it, using its system services and following its rules.

TCP/IP, the communications protocol that underlies the internet, came from the same evolutionary heritage and acted much the same way. I’ve always loved the way that when he wrote the standard for TCP, the Transmission Control Protocol, Jon Postel defined something called “the robustness principle” for enabling interoperability between two networked systems. In a later paper questioning whether the robustness principle was always correct, Eric Allman, the creator of sendmail, the leading open source email routing program, described it beautifully:

In 1981, Jon Postel formulated the Robustness Principle, also known as Postel’s Law, as a fundamental implementation guideline for the then-new TCP. The intent of the Robustness Principle was to maximize interoperability between network service implementations, particularly in the face of ambiguous or incomplete specifications. If every implementation of some service that generates some piece of protocol did so using the most conservative interpretation of the specification and every implementation that accepted that piece of protocol interpreted it using the most generous interpretation, then the chance that the two services would be able to talk with each other would be maximized. Experience with the Arpanet had shown that getting independently developed implementations to interoperate was difficult, and since the Internet was expected to be much larger than the Arpanet, the old ad-hoc methods needed to be enhanced.
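Postel’s Law as Allman describes it (be conservative in what you send, liberal in what you accept) can be made concrete in a few lines. The following is a minimal Python sketch, assuming a hypothetical “Name: value” header-line format loosely modeled on email and HTTP headers rather than on TCP itself; the function names `emit_header` and `parse_header` are illustrative, not from any real library.

```python
def emit_header(name: str, value: str) -> str:
    """Conservative sender: always produce one canonical form, 'Name: value' + CRLF."""
    # Canonical casing (e.g. 'content-type' -> 'Content-Type'), no stray spaces.
    return f"{name.strip().title()}: {value.strip()}\r\n"


def parse_header(line: str) -> tuple[str, str]:
    """Liberal receiver: tolerate odd casing, extra whitespace, and bare LF endings."""
    line = line.rstrip("\r\n")            # accept CRLF or a bare LF
    name, sep, value = line.partition(":")
    if not sep:
        # Even a liberal reader must reject input it cannot interpret at all.
        raise ValueError(f"no colon in header line: {line!r}")
    # Normalize so that differently formatted but equivalent inputs compare equal.
    return name.strip().lower(), value.strip()
```

For example, `parse_header("  content-TYPE :text/html \n")` returns `("content-type", "text/html")`, and anything produced by `emit_header` parses cleanly. The lenient side has a cost, since sloppy senders are never corrected, which is part of what Allman’s paper questions.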

When I began my open source software advocacy in the 1990s, I argued that this design feature was far more important than the software licensing rules that formally defined open source software. I observed that the original development of Unix (which had been the environment in which I received my introduction to computing) had all the features that were being celebrated as the result of open source software licenses, namely collaborative, long-distance development of pieces that somehow magically just worked together, yet it had occurred under a liberal but still proprietary software license. Meanwhile, there were projects like Sun’s OpenOffice or the first open source release of Mozilla, Netscape’s open source browser (now Firefox), that didn’t seem to have similar success in attracting independent developers and fostering innovation. And all the efforts to hire open source community managers and developer advocates didn’t change that. Mozilla didn’t really take off as an open source project until it was re-architected as a system designed for participation.

The web too was designed for participation. Its core was its protocol, HTTP, and its language for representing web pages, HTML. Everything else was up for grabs. As long as your web server or web browser spoke and understood HTTP and HTML, it didn’t matter how you implemented them.

In a way, these projects were all the result of a fundamental evolutionary advance, perhaps not dissimilar (though clearly not as significant in the overall scheme of things) to the advance that brought us multicellular life, or lungs rather than gills, or flowers and seeds rather than spores.

This was a deep design innovation in the way things work, and it allowed software to reproduce more successfully, so to speak. Software tightly tied to an individual hardware architecture and operating system was limited by the spread of its hardware. The IBM PC architecture and Microsoft’s DOS and then Windows operating systems outcompeted both IBM’s mainframes and DEC’s VAX/VMS operating system, but Unix/Linux and the Internet eventually subsumed both, because they could run on any host.

But no one set a managed goal here, other than “we want these things to work together.” That’s kind of at the level of the DNA of the thing. It’s not clear how that DNA forms in the first place – humans certainly create it, but through a set of selection pressures that make them first invent and then adopt a new pattern. Were all the advances of civilization managed processes? I doubt it. I think they were discovered through selection pressure, and the managed process takes over after that.

Ilya Sutskever, the co-founder and chief scientist at OpenAI, made a really interesting comment in his recent podcast conversation with Lex Fridman: “For any kind of capability like active learning, the thing that it really needs is a problem. It needs a problem that requires it. It’s very hard to do research about the capability if you don’t have a task because then what’s going to happen is you will come up with an artificial task.” That’s why, to my mind, the target of selection is something you discover, not something you come up with. After it’s been discovered, you can design a system around the target. But it’s hard to come up with one without the external selection pressure. I guess what I’m saying is that the selection pressure precedes the target of selection, in a kind of circular way, and that only later is it possible for the target to come first.

So in the case of the internet and open source design pattern, the problem to be solved – of interoperability – drove the initial design, but the discovery of a successful solution, whether it was email routing, or the Domain Name System, or the World Wide Web, was then the foundation for an intense competition to take it further. But the point was that, because of the design, the selection pressure ended up “preferring” systems that kept the evolutionary game going. So for example, you could see early proprietary information networks like Compuserve, AOL, and the Microsoft Network as less evolutionarily fit because they were too tightly managed, and the managers, so to speak, put up barriers to innovation, while the “internet” simply said, “compete all you like, as long as you continue to work together.”

I do think that, once discovered, an architecture of participation, a design for things to work together, is a superior adaptation to a design for things that aim for monopoly, that aim to drive out competing systems. And by recognizing the evolutionary advantages of that kind of system, designers can build better systems. But I don’t think that they “manage” those systems; they just design them in a way that is more evolutionarily fit. Although I guess there is a kind of management in not screwing it up from there!

But that doesn’t necessarily persist forever. Just like the natural world, things can get out of balance, especially if one player in the ecosystem becomes dominant enough to start to rewrite the design in its favor.

For example, Google was originally a child of the web. The web was a new evolution of the architecture of participation for computers, one that enabled humans who weren’t specially trained in computer programming to share content with each other. But the scale of the content created on the web led to a breakdown in the ability to find it. Systems like GNN (the site we created at O’Reilly) and Yahoo! (which followed in our footsteps but executed more successfully) tried to manually curate catalogs of links. Google found a way to build systems that remembered and learned from the choices humans made – what sites did they link to? with what words did they tell people what the link was about? and who clicked on them? how often? — and hundreds of other factors that allowed them to find what people really want and send them on their way. They turbocharged the architecture of participation that underlay the web. But once they became dominant, they decided that it was better to satisfy the queries with Google’s own answers. This perhaps gives a short-term evolutionary advantage (as demonstrated by Google’s continually increasing revenues and profits), but to critics like me, this looks like the same kind of extractive growth that is created by cutting down the rainforest or burning fossil fuels. It’s also the kind of behavior that led to Microsoft’s initial dominance of the computer industry, and then to their downfall: they got stuck on one fitness peak and suppressed any innovation that didn’t come from themselves, so innovation was forced to happen outside their ken. Of course, later, Microsoft came to understand what they missed, and now, perhaps, they are doing as good a job as anyone in the tech industry of trying to keep innovation going by creating value for others and not just for themselves.
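The idea of learning from “what sites did they link to?” has a well-known published form, PageRank, which treats each link as a vote weighted by the rank of the page casting it. The sketch below is a minimal PageRank-style power iteration in Python over a hypothetical four-page web; it is our illustration of that one idea, not Google’s production system, and it ignores the anchor text, click data, and hundreds of other signals mentioned above. The damping factor of 0.85 is the conventional textbook value, assumed here.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iters: int = 50) -> dict[str, float]:
    """Power iteration: each page splits its current rank evenly among its outlinks."""
    # Every page that appears as a source or a target of a link.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}            # start from a uniform distribution
    for _ in range(iters):
        # The (1 - damping) share models a reader jumping to a random page.
        new = {p: (1.0 - damping) / n for p in pages}
        for p in pages:
            targets = links.get(p, [])
            if targets:
                share = damping * rank[p] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # A page with no outlinks spreads its rank evenly over all pages.
                for t in pages:
                    new[t] += damping * rank[p] / n
        rank = new                                 # ranks still sum to 1
    return rank


# Hypothetical web: three pages link to "c", nobody links to "d".
web = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
scores = pagerank(web)
```

With this toy graph, `c` (the most linked-to page) ends up ranked highest and `d` (which nobody links to) lowest, which is the sense in which the choices of the people who build links become a collective signal.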

I find the metaphor of extractive versus sustainable systems to be very powerful.

So let me try to translate all of this rambling into a response to your assertion that “The target of selection must be a systemic goal, such as a sustainable equitable economy.” I’d rather frame that as saying that we’re hitting a point where our current economy is failing us, creating a natural selection pressure to find something different, just as the new environment of networked (as opposed to standalone) computers created the need for interoperability. The inability of our current capitalist “free market” economy to respond to challenges like climate change creates selection pressure, which leads humans to recognize it as a problem to be solved. We don’t yet know how to solve it, but we’re starting to have glimmers. My son-in-law Saul Griffith is writing a book explaining why electrification of everything is the way to go and suggesting policy and financial frameworks that will provide a systems architecture that will allow governments and businesses to reach that goal.

Once we have found an evolutionarily superior solution, it will outcompete the others. At that point, it’s perhaps possible to say that “Variation must be oriented toward the target of selection,” but even then, if you have the courage of your convictions, you will see that variation WILL be oriented towards the target of selection once the right solution framework has been found.

In the computer industry, the open source and internet design pattern outcompeted the proprietary standalone software design pattern, and almost inevitably became the target of selection for entrepreneurs.

But it is a kind of punctuated equilibrium, both because new problems arise and because entrepreneurs don’t fully understand the principles that underlie their success.

In this sense, we have to not only design better frameworks but also understand why they are better, and when they cease being better (that is, when they become less well adapted to current circumstances).

I’m not sure I’m completely understanding your premises, so take all of the above with a grain of salt. I hope this is a good start to a deeper conversation, though!

DSW: Wow—there is so much to unpack here! First, I appreciate the way that you are already seeing things in nuanced evolutionary terms. My job at this point is to formalize what you are saying a bit and relate it to the theme of the Third Way. I agree with you that evolution—both genetic and cultural—has a huge unpredictable and unintended component, especially when it comes to major innovations. For genetic evolution, we can point to examples such as multicellular life, or lungs rather than gills, or flowers and seeds rather than spores as you put it. The origin of our species can be added to this list. In some respects, we are just another ape species, but in other respects, we are a new evolutionary process (cultural evolution) with world-changing unforeseen consequences. Many aspects of the Internet Age can be understood in the same way.

Throughout human history, not just the Internet Age, we find people deliberately striving to accomplish certain goals, so this is one aspect of cultural evolution that we need to understand. Then these intentional strivings interact with each other and environmental forces, with unforeseen and unpredictable consequences. In this fashion, human life consists of many inadvertent social experiments, some that hang together and others that fall apart. My conversation with Peter Turchin covers this ground for the last 10,000 years of human history.

Against this background, allow me to play back what you have covered for the Internet Age in my own words. First with Unix and then Linux, we have a group of people with the explicit goal of creating an operating system that runs on a variety of machines. That was their target of selection, which structured their formulation of “rules by which programs can work together”. Those rules didn’t self-organize in the absence of a target of selection and required a lot of experimentation to get right.

TO’R: Yes, that sounds right to me.

DSW: Most of what I know about Linux and other open source development processes is based on the book The Success of Open Source by Steven Weber. The fact that many people can independently contribute to the process is amazing and speaks to the “variation” part of the Third Way. Even more amazing is the quality control that is required to make sure that all of the pieces of code are compatible and to prevent “forking”—lineages of code that are internally compatible but incompatible with other lineages. Sometimes forking is a good thing but mostly it needs to be avoided. The comparison with speciation in genetic evolution is apt, as Weber appreciates. The quality control speaks to the “selection” and “replication” part of the Third Way. Thus, the success of Linux and other open-source development processes is a great example of the Third Way.

I can’t resist comparing this with theory development in science, such as theories of social evolution. In this case, the target of selection is the creation of knowledge. The peer review process provides the quality control: If a manuscript doesn’t take the previous literature into account and make its own novel contribution, then it shouldn’t be published. The peer review process works to a degree but isn’t nearly as rigorous as the code vetting process in open source software development. As a result, the equivalent of forking occurs quite often—models that invent new terms (examples include “social selection” and “fitness interdependence”) without taking the previous literature into account and without adding much to what was already established using different terms. This is a serious problem for models of social evolution (go here for more).

Returning to the Internet Age, once an “architecture of participation” was created by a Third Way process, it opened up a whole new adaptive radiation of applications. Each application was created by a Third Way process—has there ever been an application that did not have an explicit target of selection requiring a lot of experimentation to reach? But the resulting profusion of applications was primarily a laissez-faire process resulting in many unforeseen dysfunctional outcomes at larger systemic scales. The only solution to this problem is to implement a Third Way process at those larger scales. I think this is close to your own way of putting it: “I think they were discovered through selection pressure, and the managed process takes over after that.”

Here are two examples that you stress and elaborate upon in your highly informative Quartz article, which you link to above. The first is the trend toward monopolization. You might be right that an architecture of participation is superior to a design that aims for monopoly—but that doesn’t prevent monopolies from taking over—again and again—as documented by Tim Wu in his book The Master Switch: The Rise and Fall of Information Empires. To avoid this dysfunctional outcome, we must design a system that is monopoly-proof. Such a system must be the deliberate target of selection.

TO’R: I’m not sure that “monopoly-proof” is possible. One of the insights that drove me from “open source” towards “web 2.0” was the idea that the history of the computer industry could be seen as a cycle of evolutionary success turning something that was formerly scarce into a commodity, at which point something else becomes valuable. So I could see that IBM had controlled the computer industry by its monopoly on a hardware architecture. But when (due to the selection pressure from the invention of the microcomputer) it created its own version, the IBM PC, that machine became so successful and widespread that it provided a fertile landscape for innovative software companies to evolve. One of those companies, Microsoft, realized the evolutionary advantage of dominating a software layer – a standard operating system that ran on all those PCs — and took control of the industry from IBM. But then, they used that control for their own advantage, not leaving enough opportunity for others to innovate. So software developers went elsewhere — to open source software and the internet — even though it wasn’t clear how to make money there. When I watched the growth of that new environment, I started to ask myself if the pattern was going to repeat.

Early in 2003, Dave Stutz, who’d been leading Microsoft’s open source efforts, left the company, and in a remarkable open letter, made the case that Microsoft needed to adapt to the way that software was being commoditized by open source. That clicked with what I’d been thinking about the IBM-Microsoft power transition and left me asking, “If software became a new source of monopoly when hardware became a commodity, what will become the source of monopoly when software becomes a commodity?” I came to the conclusion that it was going to be data.

About a year after that, I was giving a talk at the Open Source Business Conference about this topic, and met Clayton Christensen, who was there to talk about what he called “The Law of Conservation of Attractive Profits.” And of course, we realized we were both talking about the same thing.

So as a result of seeing this as a kind of Hegelian cycle, I try to advise the peak predators of each age against optimizing for their own short-term advantage, and instead to try to keep the game going longer. Sustainability in business doesn’t just mean environmental sustainability in the traditional sense. It means leaving enough opportunity for everyone, so you don’t inadvertently create selection pressure for entrepreneurs to leave the current system and look for opportunities elsewhere.

Unfortunately, though I think Microsoft gets that idea now, I don’t think Google will wake up to it until they find themselves on the downslope of power as the evolutionary advantage (or in economic terms, the opportunity) has moved elsewhere.

DSW: Again, I think we largely agree. We certainly agree that monopolies are only good for themselves over the short term, not for the larger system or even for themselves over the long term. If there is a solution to the problem at all, it includes convincing the monopolies to take a larger systemic view of their own interests. Even then, however, there would need to be oversight and sanctions against bad actors at the whole system scale. That’s all I meant by “monopoly proof” and it is never total. Let’s remember that after a billion years of evolution, multicellular organisms are still not cancer-proof!

The second example that you stress is the problem of short-term extractive growth. I love the way that you compare Google’s obsession with its own growth to cutting down the rainforest and burning fossil fuels. That’s the problem with laissez-faire in all its forms. It is simply profoundly untrue that lower-level agents pursuing their narrow interests—whether an individual, a small firm, or a leviathan like Google—robustly benefit the common good. Most of our modern pathologies are of this sort, as Anthony Biglan documents in a series of TVOL articles for the tobacco industry, the arms industry, the food industry, Big Pharma, and the fossil fuel industry (start here). At the beginning of this conversation, you said that “we always had an ecosystem view of everything we did.” That makes you and your associates prosocial by nature, but the global system must be well protected against agents that are not like you and, as with monopolies, this must be a deliberate target of selection. This is the conclusion that you also reach in your Quartz article when you say:

Given this understanding of the role of a platform, regulators should be looking to measure whether companies like Amazon or Google are continuing to provide an opportunity for their ecosystem of suppliers, or if they’re increasing their own returns at the expense of that ecosystem.

My own part in this conversation is growing too long, but I have one more important observation to make before turning it back to you. It is easy to imagine lower-level agents (large or small) as selfish when they pursue their narrow interests at the expense of the ecosystem upon which everyone’s long-term welfare depends. But selfish intent is only sometimes the case. Albert Camus put this beautifully in his novel The Plague:

The evil in the world comes almost always from ignorance, and goodwill can cause as much damage as ill-will if it is not enlightened…There is no true goodness or fine love without the greatest possible degree of clear-sightedness.

In other words, Google could be sincere about its old code of conduct (“Don’t be evil”) or new code of conduct (“Do the right thing”) but still do harm because they are misled by the myth of the invisible hand. If Google and other leviathans became convinced about the need for the Third Way, they could quickly become part of the solution rather than part of the problem because they would be seeing the world through the lens of the right theory. Do you think this might be possible? Or am I being naively optimistic?

TO’R: The problem with “Don’t be evil” was that it was a values statement. That takes you only so far. What we really need is a theory of what is going to be better for the company as well as for others.

I used to argue with various people who were dogmatic about open source licenses that we didn’t want open source to be a religion. We wanted it to be a science. We wanted to understand what worked and why it worked.

In some sense, economic theories, whether the neoliberal version of the invisible hand or something pro-social like Kate Raworth’s Doughnut Economics or your evolutionary economics, aim to be scientific theories rather than religious systems. They often get derided for this – economics is referred to as “the dismal science” — but that’s rather like calling astronomy a dismal science in the age of Ptolemy because he didn’t correctly understand orbital mechanics, or in the age of Kepler and Newton because they didn’t understand quantum mechanics.

It is certainly true that economics will never be a “hard science” like physics or chemistry, but as you have argued in your books, human behavior extends from biological foundations and can be better understood in terms of evolutionary theory. I like to think that there will be a time, perhaps with the aid of AI, when we will have a better understanding of how to design economies that are both productive and innovative (i.e. continue to evolve) but that are also stable and resilient.

Even now, there’s a lot of evidence that when companies make a systematic effort to understand how to create value not just for themselves, and not even just for themselves and their customers, but for themselves, their employees, their customers, their suppliers, their competitors, and the society around them, they will build longer-lasting and ultimately more successful businesses.

DSW: I’m glad that you mentioned Raworth’s work as an approach to economics with the welfare of the global system in mind. And I couldn’t agree with you more about the need for science in the service of values. But I have been wanting to stress something throughout this conversation—that evolution is based on relative, not absolute fitness, as Robert Frank points out in his book The Darwin Economy. It doesn’t matter how well an organism survives and reproduces in absolute terms, only relative to other organisms in its vicinity. Likewise, the reason a business executive buys a $1000 suit is to look better than a rival in a $500 suit. In some economic environments, a business with a sense of enlightened self-interest can lose in competition with a business that is more narrowly self-interested. This is why appeals to altruism or enlightened self-interest can actually be injurious. If you declaw an alley cat and put him back into the alley, you haven’t done him a favor. You also need to change the alley! More generally, it is possible to do well by doing good, but only in a system that is designed to reward prosocial behavior and detect and punish more narrowly self-serving strategies that would otherwise have the relative fitness advantage over the short term. How do you factor that into your advice to the tech giants to do the right thing for their own good?
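The point about relative fitness can be made precise with the standard discrete replicator equation from evolutionary game theory, in which a strategy’s share of the population grows in proportion to its fitness divided by the population mean. This model is our choice for illustration, not one used in the conversation, and the payoff numbers below are hypothetical. The Python sketch shows two things: scaling everyone’s absolute payoff leaves the outcome unchanged, and only changing the payoffs themselves (changing the alley) reverses which strategy spreads.

```python
def next_generation(freqs: list[float], fitness: list[float]) -> list[float]:
    """One step of discrete replicator dynamics: p_i' = p_i * w_i / w_bar."""
    # w_bar: population mean fitness, weighted by each strategy's frequency.
    mean_w = sum(p * w for p, w in zip(freqs, fitness))
    return [p * w / mean_w for p, w in zip(freqs, fitness)]


start = [0.5, 0.5]  # hypothetical shares: [prosocial, narrowly self-interested]

# "Old alley": the narrow strategy earns 10% more, so its share grows...
bad = next_generation(start, [1.0, 1.1])
# ...and making everyone ten times richer in absolute terms changes nothing.
bad_richer = next_generation(start, [10.0, 11.0])
# "Changed alley": a system that penalizes the narrow strategy reverses the trend.
good = next_generation(start, [1.0, 0.9])
```

Rounded to three places, `bad` and `bad_richer` are both `[0.476, 0.524]`, while `good` is `[0.526, 0.474]`: the absolute level of payoffs is irrelevant, and only the rules that set relative payoffs decide which strategy wins.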

TO’R: That’s a very good point. But that’s exactly why I talk about the architecture of the system. Typically, a monopoly isn’t defeated by a competitor, but by a fundamental change in the environment. That environmental change can come from nature (as we’re seeing right now with the radically changed conditions favoring online retail over in-person retail as a result of the COVID-19 pandemic) or from technological evolution.

But if we look at the computer industry, as we have already discussed, we see that those changed conditions have to be systemic. IBM’s dominance of the computer industry wasn’t upended by another company that filled the same ecological niche but by IBM’s inadvertent release of a computer made from commodity parts, which led to a fundamental change in the structure of the industry (that is, the environment). Companies then responded to the selection pressure. Many used their old toolkit: they believed that the axis of competition was better hardware, just as it had been in the earlier era. But a new species of company found advantage in developing software that ran on anyone’s hardware.

Microsoft emerged as the apex company in the PC era, but they too were threatened by a fundamental change in the environment: the shift to network-centric computing.

As I’ve described earlier, the architectures of Unix and the Internet were better suited to that environment, so companies that relied on those architectures outcompeted those that held onto old architectures and business models.

But this still doesn’t get me to your idea that you have to start with the target of selection. I think you have to start with observing and deeply engaging with changes in the environment, and THEN you can hypothesize about a target of selection that will help you outperform.

So, for example, when the internet took off, my idea was that data would be the new source of competitive advantage. The internet wasn’t designed that way. It was a side-effect of the new environment. But companies that recognized that earlier than others, and investors that saw it, could place bets aligned with it.

So there is eventually a target of selection, but it isn’t pulled out of a hat. It’s a hypothesis about what will give an evolutionary advantage. And that hypothesis is tested in the evolutionary give-and-take of the marketplace.

There are those of us who have the theory that being pro-social is ultimately the best economic strategy, but we may well be wrong. There are lots of evolutionary dead-ends, and our economies may be stuck in one of them.

That being said, to paraphrase Martin Luther King, “the arc of history is long, but it bends towards evolutionary fitness.” I was reminded of this when reading James O’Toole’s wonderful book, The Enlightened Capitalists, about business pioneers who tried to do well by doing good. It starts with the story of Robert Owen, a Horatio Alger figure who took over a failing mill and was horrified by the conditions there. He took the children out of the factory and set up a school for them. He reduced working hours and offered healthcare to his workers. His New Lanark Mills prospered. Once they did, his investors pushed him back towards the old extractive model. He found new investors, bought out his first set, and continued to prosper. Eventually, though, he entered into a political campaign to end child labor, and the entire property-owning class turned on him. In the end, the world caught up with his ideas.

We’re in a moment now when the pro-social, labor-friendly innovations of the post-war period have been rolled back because we have been told that favoring corporate profits and shareholder value is the most effective (think “evolutionarily adaptive”) way to run an economy. But after forty years of that experiment, the evolutionary feedback system is telling us more and more urgently that the systemic experiment is wrong.

New evolutionary pressures require us to create a new systemic paradigm within which companies and markets can operate. As you suggest, pro-social, cooperative systems may be the target of selection. But I think we have to hold that belief loosely. I don’t think you impose a target of selection; you discover it. The ultimate target of selection is to find those behaviors that are most robust in the current environment and to persuade others to embrace them.

DSW: I’d like to end by giving you the opportunity to stress some of the themes from your book WTF? What’s the Future and Why It’s Up to Us that haven’t already been covered in our conversation. For example, I recall you describing Artificial Intelligence (AI) algorithms as evolutionary processes operating at warp speed that can be a force for good—as long as they are actively oriented toward human welfare goals. Otherwise, they become part of the problem. I also recall you describing the positive potential of the gig economy and the amazing ability of the tech giants to get things done—as long as they focus on the global common good as the target of selection rather than their current more limited goals. It’s as if the Internet Age is only one step away from a benign future—that step being the appropriate target of selection.

TO’R: The key point of my book is that economists and the creators of public policy have a lot to learn from the technology industry. In a lot of ways, the big tech companies are managing economies of their own, and so they are a laboratory for how things can work in the 21st century. And we’re seeing a recapitulation of the usual evolutionary process, with a lot of successes and a lot of dead-ends, and with companies forgetting in their age what they knew instinctively in their youth, and then, if my reading is right, eventually being overtaken by companies that have learned their lesson or got it right and stuck to it.

We’re seeing this story play out right now, I think, with Google and Microsoft. Microsoft was originally aligned with the personal computer architecture, which enabled distributed competition, and they understood that their goal was to build a system that helped others to succeed. But then they got greedy and tried to take over all the valuable ecological niches. Google was originally aligned with the internet architecture of participation and cooperation and understood that their job was to send traffic to others and to help them succeed. But then they got greedy and tried to take over all the valuable ecological niches…

Meanwhile, Microsoft, having learned its lesson, under CEO Satya Nadella has tried to return to its original mission of enabling others, with great success.

The question I try to take up in my book is why it is so hard for companies to stick to their ideals even in the face of evidence that doing so is better for them in the long term.

And there, I come to the idea that our economy is designed with what I call a fitness function (or sometimes an objective function or optimization function), in much the same way as Google’s search algorithms are, or Facebook’s news feed.

Algorithmic systems all have this. It’s close to what you call “the target of selection.” Google’s fitness function for search used to be “show people what will make them go away satisfied.” Now it has become “show people what will make them satisfied with what we, Google, show them, so they don’t have to go somewhere else.” They tell themselves that their goal was always to give people what they want as quickly as possible, and that they are still aligned with that goal, but in fact they have missed the point that for any environment to be robust, it needs lots of participants. Monocultures are not resilient, and they are not adaptive.

But I digress. We can see pretty clearly that Facebook had the wrong target of selection or fitness function for its algorithms. They believed that if they showed people content that they engaged with, it would bring them closer together. We now know that they were wrong, and they know that they were wrong, and they are trying to learn from the responses and adapt their algorithms.

I jump from that to the idea that our markets are also designed systems, with algorithms that have an objective function that we now know to be wrong. I make the case in the fourth part of the book (after exploring how tech platforms, algorithms, and AI work and how they can go wrong) that starting in the 1980s, we built an algorithmic economy focused on increasing shareholder value. It was a reasonable hypothesis at the time, a time of high inflation and of a cycle of ever-increasing labor costs and consumer prices. It changed that dynamic for sure. But after forty years, we’ve seen the downsides, just as Facebook has seen the downsides of its algorithms.

But unlike Facebook, our policymakers pretend that “the market” is a natural phenomenon, rather than a designed system with rules (a tax code, for instance) and incentives (like the alignment of CEO pay with share price) that is just as much under our control as Facebook’s algorithms are under the control of its management team.

The big takeaway for me is that we desperately need to rewrite those rules. I make the point that AI may not be the achievement of an intelligence separate from us, but rather, a networked hybrid of human and machine intelligence. In this sense, Google, Facebook, and our financial markets are already hybrid AIs, harnessing and directing the intelligence of billions of humans. And of all of these, our financial markets are the master AI, the ruler of all the others. And increasingly, that AI has been given the instruction to treat humans as a cost to be eliminated. This is very dangerous for our long-term survival.

But the other lesson of tech is that even when you have a very powerful hypothesis about the world, you don’t bet everything on it. Adaptation is not something that you do once. You’re in a constant dialogue with your environment. After all, everything you do changes that environment, and if you keep doing what was once adaptive, it becomes less so over time.

I do think that there is an overall target of selection that we should be looking for, and I agree with you that human flourishing is a big part of that. But the devil is in the details. For a long time, we thought that human flourishing meant ever more access to cheap disposable consumer goods.

Everything we do is an experiment in adaptation. We have to keep at it.

DSW: That’s close enough to Third Way thinking for me! I’m delighted to include this conversation in the series.

Originally published as part of the Third Way of Entrepreneurship series at This View of Life
