By Lixing Sun

Rational choice theory lies at the heart of neoclassical economics. It assumes that humans are utility maximizers who always go for the option with the highest net benefit. Since the 1970s, however, behavioral economists have poked so many holes in the theory that pronouncing its death now feels like flogging a dead horse. Can we save rational choice theory and revitalize neoclassical economics? If so, how? Here is a two-point proposal, an attempt to infuse evolutionary ideas into our economic thinking.

“Rational” Is Relative

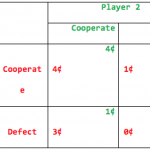

Consider revenge and retaliation. Why do we so often engage in spiteful actions that are ruinous for all parties involved? An early clue emerged in 1960 from a little-known economic experiment in which college students were asked to play a repeated two-person, non-zero-sum game. In the game, two players separated by an opaque screen each chose between two buttons, one for “Cooperate” and the other for “Defect,” with the following payoffs:

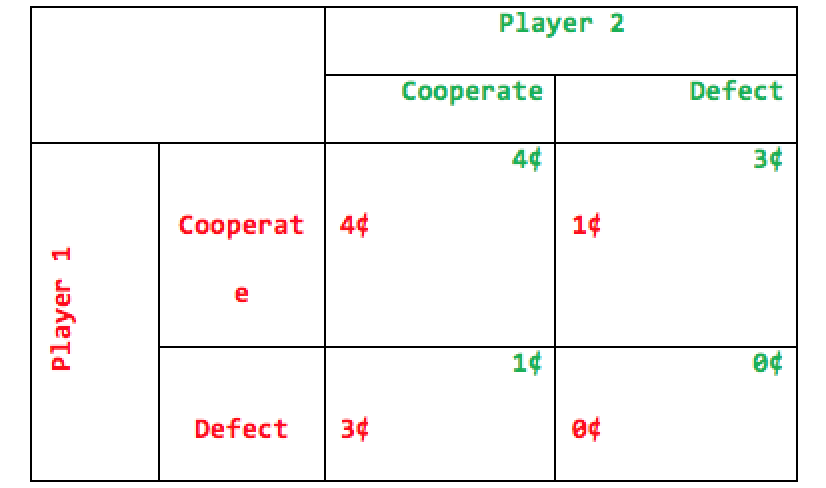

Table 1. A “Cooperation Game” with absolute payoffs

Given the payoff matrix in Table 1, what will the players do? The answer based on neoclassical economics is that they both opt for “Cooperate” because this will yield the highest profit for both (4¢ per round). Since mutual cooperation brings in a better return than any other strategic combination for both players, it appears unbeatable—a Nash equilibrium in the lingo of game theory. The game is accordingly called a Cooperation Game. Here, the paired numbers in each of the four cells are the payoffs for Player 1 and Player 2, respectively, corresponding to the strategies (“Cooperate” or “Defect”) they choose. For instance, if both players choose “Cooperate,” their payoffs are the same, 4¢, or (4¢, 4¢) in the top left cell in Table 1. (Note: 1¢ in 1960 is worth 8¢ today.)
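The equilibrium claim can be checked mechanically. The sketch below is only an illustration: the (4¢, 4¢) cell comes from the text, while the other three cells are assumed values chosen for the example and may not match Table 1. The names `ABSOLUTE` and `is_nash` are hypothetical helpers, not part of the original study.

```python
from itertools import product

STRATEGIES = ("Cooperate", "Defect")

# Absolute payoffs in cents as (Player 1, Player 2).
# ASSUMPTION: only the (4, 4) cell is stated in the text; the rest are illustrative.
ABSOLUTE = {
    ("Cooperate", "Cooperate"): (4, 4),
    ("Cooperate", "Defect"): (1, 3),
    ("Defect", "Cooperate"): (3, 1),
    ("Defect", "Defect"): (0, 0),
}


def is_nash(payoffs, s1, s2):
    """True if neither player can gain by unilaterally switching strategy."""
    p1, p2 = payoffs[(s1, s2)]
    p1_content = all(payoffs[(alt, s2)][0] <= p1 for alt in STRATEGIES)
    p2_content = all(payoffs[(s1, alt)][1] <= p2 for alt in STRATEGIES)
    return p1_content and p2_content


# Collect every strategy pair that is a Nash equilibrium in absolute payoffs.
nash_cells = [cell for cell in product(STRATEGIES, repeat=2)
              if is_nash(ABSOLUTE, *cell)]
print(nash_cells)
```

Under these assumed payoffs, mutual cooperation is the only cell from which neither player wants to move, which is the neoclassical prediction described above.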

When real people played the game in the study, however, the results were anything but economically rational: nearly half of the players chose to defect! How can Homo sapiens stray so far from Homo economicus?

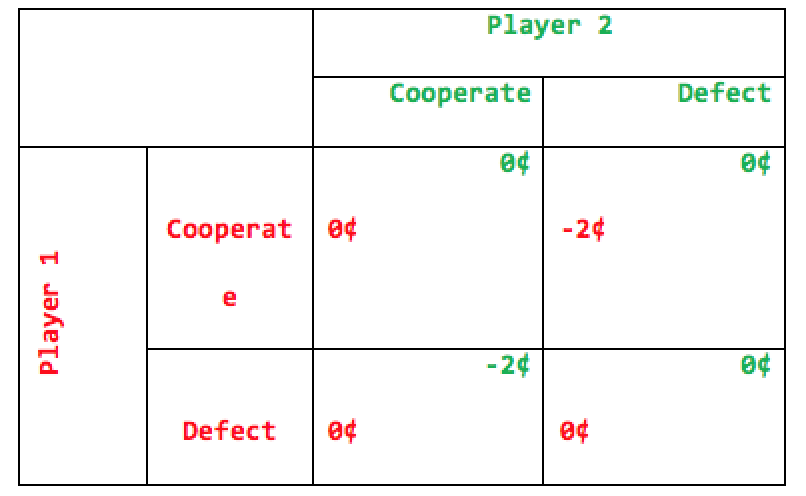

The answer lies in a hidden glitch in our reasoning process. In this economic game, what is considered “rational” in neoclassical economics is absolute payoff. The real gauge in evolution, however, is relative payoff. The difference becomes obvious if we convert absolute payoffs in Table 1 into relative payoffs—the net differences between the two players for the four payoff cells—in Table 2:

Table 2. The same “Cooperation Game” with relative payoffs

Why are we prone to playing the Spite Game? What will you play now? Table 2 shows that the best possible relative payoff for you is the same for either strategy, but if you play “Cooperate,” you risk being suckered when your partner plays “Defect”: -2¢ for you and 0¢ for your partner. So, playing “Defect” guarantees the best possible relative payoff, regardless of what your partner does. Since your partner is also a thinking Homo sapiens, you and your partner become locked in mutual defection. (Technically, “Cooperate” is weakly dominated by “Defect” for both players in this game.) As such, what begins as a seeming Cooperation Game in Table 1, where absolute payoff is what counts, reveals its true colors as what I call a Spite Game in Table 2, where relative payoff matters a great deal. (See economist Thomas Riechmann’s paper for a general treatment of this issue.)
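The shift from absolute to relative payoff can be sketched in a few lines. This is an illustration under stated assumptions, not a reproduction of the tables: only the (4¢, 4¢) cell is given in the text, the other absolute payoffs are assumed, and relative payoff is taken here as the plain difference between your payoff and your partner's, so the exact figures may differ from those in Table 2. The helpers `your_relative_payoffs` and `never_worse` are hypothetical names.

```python
STRATEGIES = ("Cooperate", "Defect")

# Absolute payoffs in cents as (you, partner).
# ASSUMPTION: only the (4, 4) cell is stated in the text; the rest are illustrative.
ABSOLUTE = {
    ("Cooperate", "Cooperate"): (4, 4),
    ("Cooperate", "Defect"): (1, 3),
    ("Defect", "Cooperate"): (3, 1),
    ("Defect", "Defect"): (0, 0),
}


def your_relative_payoffs(payoffs):
    """Map each cell to your payoff minus your partner's payoff."""
    return {cell: you - partner for cell, (you, partner) in payoffs.items()}


def never_worse(table, candidate, other):
    """True if `candidate` pays you at least as much as `other`
    against every move your partner can make."""
    return all(table[(candidate, move)] >= table[(other, move)]
               for move in STRATEGIES)


YOUR_ABSOLUTE = {cell: you for cell, (you, _) in ABSOLUTE.items()}
YOUR_RELATIVE = your_relative_payoffs(ABSOLUTE)

# In absolute terms, "Cooperate" is the safe choice ...
print(never_worse(YOUR_ABSOLUTE, "Cooperate", "Defect"))
# ... but in relative terms, "Defect" is never the worse choice.
print(never_worse(YOUR_RELATIVE, "Defect", "Cooperate"))
# Worst case for each strategy in relative terms.
worst_case = {s: min(YOUR_RELATIVE[(s, move)] for move in STRATEGIES)
              for s in STRATEGIES}
print(worst_case)
```

With these assumed numbers, cooperating exposes you to a relative loss of 2¢ while defecting never leaves you behind your partner. That is the logic that turns a Cooperation Game into a Spite Game.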

Apparently, according to biologist David Barash’s book, The Survival Game, we often attempt to maximize the difference in payoff among peers, as though we compete to gain the upper hand in a small tribe—a milieu in which the human brain evolved. Thus, as it seems, spite isn’t evolution’s sloppy job in designing human mentality. Rather, it’s a logical consequence of evolution operating according to relative payoff.

Naturally, spite can’t be a curse for humans only. Similar behaviors have also been observed in other organisms. Bacteria, for example, manufacture toxins that kill their conspecific neighbors. Sticklebacks raid the nests and munch on the eggs of their own species. Swallows defend large territories to jeopardize the reproductive chances of their competitors. Monkeys harass mating couples in their cohort. Perhaps that’s why humans have evolved the habit of making love in private.

Like many other organisms, we humans can gain a competitive edge by either doing better than our rivals or making our rivals do worse than us, regardless of whether both parties are better off or worse off in the absolute sense. Put differently, competition is about gaining relative payoff, or relative utility in economic terms.

Clearly, one way to infuse Homo economicus with the blood of Homo sapiens is to enrich the meaning of “rational,” which should be gauged by relative utility, not absolute utility, especially when we deal with rivalries in direct competition. This will make otherwise “irrational” choices—envy, revenge, retaliation—completely rational.

Of course, relativity in payoff isn’t the entire story. Rationality also depends on the time frame we use.

“Rational” Is a Lifetime Deal

Consider an economic Ultimatum Game, where two people, a Proposer and a Responder, are given some money, say $10 or $100. The Proposer suggests a way to split the money between them. If the Responder agrees, each keeps the share proposed. If the Responder rejects it, neither of them gets anything.

According to rational choice theory, the Proposer should give the Responder as little as possible, and the Responder should accept any non-zero offer from the Proposer. Experimental results from real people in industrial societies, summarized in economist Colin Camerer’s book, Behavioral Game Theory, however, are starkly different: the Proposer’s offer is typically above 30%, and the Responder rejects the Proposer’s offer about half the time when the offer falls below 20%.

Why, again, are Homo sapiens so unlike Homo economicus?

The key to the answer lies in whether we see the issue in the short or long term, in addition to using the concept of relative payoff.

The disparity between reality and rational choice theory stems mainly from the fact that most Ultimatum Game experiments are set up as one-shot deals between strangers. This setting is quite detached from the historical milieu in which our brains evolved. Despite radical cultural changes, much of our instinctual behavior today is still tuned to life in the Stone Age, when our ancestors were living in small, close-knit bands. In this ancestral environment, if you bumped into another person, it was unlikely that you would never meet him or her again. As such, our brains are largely tuned to repeated social dealings in the long run. This is not what most game theory experimenters presume in their studies.

How might a mentality tuned to such a long-term vision be favored by natural selection? One factor is that the Proposer’s generosity can encourage the Responder to return the favor in the future. Such a reciprocal partnership can benefit both parties over time.

Another factor favoring a long-term perspective is reputation. A good reputation confers on the Proposer a halo of trustworthiness, a badge for being cooperative. A person with a good reputation, as shown by mathematicians Martin Nowak and Karl Sigmund, often gets help from people for free, in the sense of not having to return the favor. As such, a good reputation earns one long-term profit in a stable community, a fact well reflected in folk wisdom in many societies: “Kindness will pay off.”

The value of reputation can explain such enigmatic human customs as dueling in Europe, “saving face” in East and South Asia, and honor killing in some Muslim communities. For instance, the ancient Chinese practiced filial piety, in which family elders were revered to a degree that they were entitled to the best living conditions—the finest food, the coziest rooms, and the highest social status—even though they were too old to work. The logic: filial piety served as the gold standard for reputation. (How can we trust a person who won’t even treat his parents or grandparents well?) Without knowing the value of reputation, the practice of filial piety makes no economic sense. Clearly, the benefit of reputation warranted the cost of sacrifice.

Today, even without conscious learning, our genes, through their expression as our instincts and intuitions, still send the old, stubborn instructions to our minds, making us behave as if we, as Proposers, would encounter the Responders again down the line. This subconscious (and otherwise irrational) perspective coaxes us to resist the temptation to burn bridges that we are likely to cross again in the future. In fact, neural imaging studies show that our natural penchant for cooperation is so ingrained that when we share, donate, and act fairly, the brain areas associated with reward are mobilized. We feel happy and satisfied as a result of our prosocial actions. Apparently, evolution has equipped us with a subconscious long-term perspective that often disagrees with the neoclassical economic assumption that we maximize utility in the here and now.

Now, why do so many people who play the Responder reject a 20% or even 30% offer? One answer is our evolved sense of fairness: we often instinctively gauge our gain based on relative payoffs against our rivals. Another answer lies again in reputation. When the Responder accepts a low offer, even one seemingly better than nothing, his or her reputation suffers. Knowing the Responder’s readiness to settle for less, others in the community may take advantage of him or her in future transactions. As a result, in the long run, accepting low offers is costly. Rejecting low offers, by contrast, is a worthwhile short-term sacrifice that serves to prop up a long-term public image. Clearly, rational choice theory has difficulty handling such tradeoffs between short-term losses and long-term gains.
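The tradeoff between a short-term loss and a long-term gain can be made concrete with a toy model. Everything in this sketch is an assumption made for illustration: a $10 pot, a Proposer who starts with a low offer of $1 and switches permanently to a fairer $4 once a low offer has been rejected, and a Responder who rejects anything below a fixed threshold. The function `lifetime_payoff` is a hypothetical helper, not a model taken from the studies cited here.

```python
def lifetime_payoff(rounds, threshold, low_offer=1, fair_offer=4):
    """Total Responder earnings (in dollars) over repeated Ultimatum Games.

    ASSUMED toy dynamics: offers start at `low_offer`; after the first
    rejection, Proposers offer `fair_offer` for the rest of the game.
    The Responder rejects any offer below `threshold`.
    """
    total = 0
    offer = low_offer
    for _ in range(rounds):
        if offer >= threshold:
            total += offer       # offer accepted: Responder keeps it
        else:
            offer = fair_offer   # offer rejected: nobody is paid this round,
                                 # but reputation raises future offers
    return total


# A Responder who accepts anything versus one who rejects offers below $3.
for rounds in (1, 10):
    accept_all = lifetime_payoff(rounds, threshold=0)
    reject_low = lifetime_payoff(rounds, threshold=3)
    print(rounds, accept_all, reject_low)
```

In a one-shot game the accommodating Responder comes out ahead, $1 against $0. Over ten rounds under these assumptions, the Responder who rejected the first low offer earns $36 against $10, which is the sense in which a costly rejection can pay for itself over a lifetime.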

Evolution is a blind, amoral process that favors only organisms leaving behind the most copies of their genes in their lifetimes, regardless of whether they behave selfishly or selflessly in the short term. That is, when the ultimate evolutionary prize is lifetime fitness, being either cooperative or competitive makes no difference, for they are two sides of the same coin, serving the same end.

Humans have long lifespans and live in stable social groups. These conditions, as biologist Robert Trivers argues, favor cooperation, which often leads to better payoffs than what individuals can accomplish alone in the long run. That’s why prosocial behaviors have thrived in humans. Accordingly, rather than maximizing the utility in every one-shot deal as assumed in rational choice theory, being rational in evolution means behaving in a way that can lead to maximum lifetime payoff.

With these two conceptual modifications to rational choice theory, relative payoff and lifetime payoff, neoclassical economics can be made compatible with evolution and gain traction in the real world.

2016 April 12
